A Better Way to Search with AI for Domain-Specific Information (and The Value of LLMs That Don't Always Answer)

There are a lot of large language model (LLM) chatbots, such as ChatGPT, Claude, and Google Gemini, that offer answers. The problem is that they are biased toward answering even when there is insufficient information, so they make stuff up (sometimes called “hallucinations”). Or they give us the answer they think we want to hear rather than the answer supported by the evidence (sometimes called “sycophancy”). That’s why my favorite feature of Dewey (the tool powering ParentData’s chatbot), and the header for this article, is Dewey’s non-answer.

Emily Oster, an economist and author who founded ParentData, wrote resources that were helpful to my wife and me when we were starting to have kids. Her pregnancy book Expecting Better, and later her website ParentData, have been part of a healthy variety of helpful resources (including books, websites, our pediatrician, family, and friends).

This year, Oster's website launched a chatbot feature. I was immediately curious and did some digging to understand what was behind it. I have liked Oster's work, but I was concerned that AI hallucinations could provide inaccurate parenting information, given the concerns I have raised elsewhere about AI misuse in law and factual errors in AI search engines like Google AI Overview and Perplexity.

What I found was LLM-powered search that points toward what I hope could be a better way to use LLMs in the future, for both writers and consumers:

- For writers, what you want to do is write. Unfortunately, “the algorithms” promote a cult of the new. So you write “the thing” and then you have to constantly post about it. For every primary thing you write, how much more time is spent making sure anyone discovers it? With LLM-powered search, people can find your quality evergreen content that addresses their questions. The deep dives are worth it.

- For consumers, you don’t want to have to guess the exact right grouping of words to type into a search to find your answer. You want to tell it the kind of thing you want to know about and have it bring you answers. LLMs can be great at that. What you may get from other LLM-powered search is something more like good sources + bad sources + possibly groundless hallucinations, mixed together with possible misunderstandings and summarized as the warmed-over average of all that. Dewey is grounded in an expert’s writing, bringing you only clearly sourced answers.

I spoke with one of the founders of Dewey earlier this year. I do not have any affiliation with Dewey, do not receive any compensation based on this post, and provide this evaluation independently as a professional and as a parent based on my personal opinions.

Another AI Option with ParentData and Dewey

I know there are parents out there who want absolutely nothing to do with AI. I like the books Tech-Wise Family and The Anxious Generation, both of which have been very influential in reducing technology use in our own family and in our community this past year. So you might be surprised that I’m recommending an AI tool. But the reason I like Dewey is that I believe it is making the internet less crappy by using AI responsibly to help parents get to well-written information and use their internet time better.

Examples of Dewey’s Normal Response and Rejection

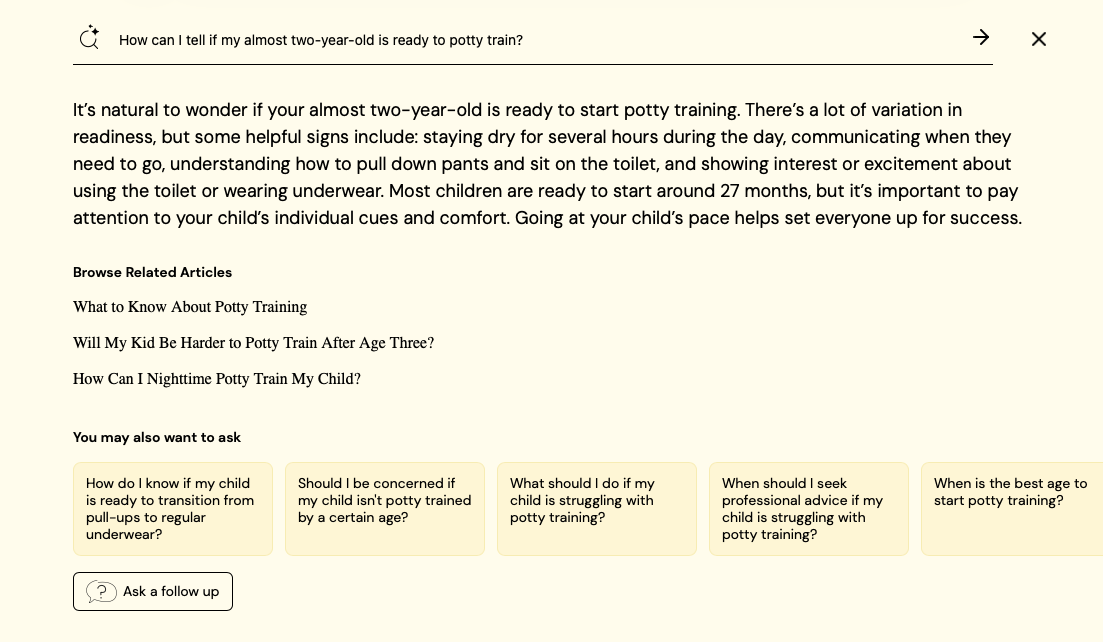

Basic Dewey interface: Answer, articles, suggestions

The Dewey-powered chat in ParentData generates:

- a chat-based response (certain queries may not generate a response)

- links to top related articles (semantic search)

- suggested follow-up questions

Dewey also refrains from answering some questions.

- Importantly, the Dewey chatbot does not answer questions that are outside the expert’s wheelhouse.

- If someone asks a question that the site’s content does not answer, the query is shared with the website owner’s team to review whether it is an information gap they should fill by writing new content. (For my example, I asked a question about running an LLM locally on my computer, which is not something ParentData would want to cover.)
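For readers curious about the mechanics, here is a minimal sketch of the general “answer only when grounded” pattern described above. It is not Dewey’s actual implementation, which I have not seen: the article titles, the `answer` function, the `gap_queue` list, and the 0.2 similarity threshold are all hypothetical, and simple word-count cosine similarity stands in for the embedding-based semantic search a production system would more likely use. The point is the control flow: when no source clears the relevance threshold, the tool returns nothing and logs the question as a possible content gap instead of improvising an answer.

```python
import math
import re
from collections import Counter
from typing import Optional

# Hypothetical mini-corpus standing in for an expert's library of articles.
ARTICLES = {
    "Safe sleep basics": "Place babies on their back to sleep. "
    "Keep blankets and stuffed animals out of the crib.",
    "Introducing peanuts": "Current guidance supports early peanut introduction "
    "for many infants. Ask your pediatrician.",
}

# Hypothetical queue of unanswered questions for the site owner's team to review.
gap_queue: list[str] = []


def vectorize(text: str) -> Counter:
    """Lowercased word counts: a crude stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def answer(question: str, threshold: float = 0.2, top_k: int = 2) -> Optional[list[str]]:
    """Return titles of sufficiently relevant articles, or None (a non-answer)."""
    q = vectorize(question)
    scored = sorted(
        ((cosine(q, vectorize(title + " " + body)), title) for title, body in ARTICLES.items()),
        reverse=True,
    )
    relevant = [title for score, title in scored[:top_k] if score >= threshold]
    if not relevant:
        # Nothing in the corpus supports an answer: log the gap rather than guess.
        gap_queue.append(question)
        return None
    return relevant
```

With this toy corpus, asking “How should my baby sleep in the crib?” returns the safe-sleep article, while “How do I run an LLM locally on my computer?” returns None and is added to `gap_queue`. A real system would also need a generation step that writes the chat response only from the retrieved articles; the sketch covers just the decision of whether there is anything to ground an answer in.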

Find more quality content

How might Dewey help make the internet less crappy? Good writers should not be churning out “content” with clickbait headlines and hot takes meant to get angry engagement. And they shouldn’t have to cater to algorithms that reward that kind of writing. Instead, writers should try to write things that matter and will hold up. For example, Emily Oster has an extensive catalogue of thoughtful, well-researched writing on parenting topics. You may or may not agree with everything she’s written, but it’s clear she’s tried to write from a place of intellectual honesty.

But how will that kind of writing be rewarded if readers never find it? The current social media model means writers have to constantly post short-form junk to drive traffic to their real work. Even the most writer-focused social media platform, Substack, is becoming more like that all the time.

Search on the internet seems to be getting worse. Social media websites penalize external links (that’s why posts on Twitter/X, Facebook, and LinkedIn often put the link in a comment rather than in the original post). People are making their websites harder to access so their work won’t be scraped to train AI models that then compete with their own work (e.g., travel bloggers, recipe creators). Google Search with AI Overview and the AI chatbots may be sending less referral traffic to content creators.

Dewey, on the other hand, has explicit permission to use the content, because it is deployed on the content creator’s own website. Writers with the best range of high-quality, evergreen content would benefit the most from a tool like Dewey. Based on our experience up to this point, I think Emily Oster is a responsible person whom I trust to engage wisely with AI.

I have also spoken with Alexis Tryon, one of the founders of Dewey Labs, and I think the vision she and her husband have for the company is solid. AI as librarian, helping the user track down relevant information through semantic search, is not as exciting as a genius chatbot that knows everything. But given the problems I’ve seen with large language model hallucinations in legal and academic citations, I think it’s important to encourage approaches to generative AI that ground facts in specific sources.

Midwest Frontier AI Consulting does not have any affiliation with Emily Oster, ParentData, or Dewey Labs. This review is based on my professional opinion as a generative AI consultant and a parent. I received no compensation for this review and do not benefit from anyone choosing to use ParentData or Dewey.

Why General Purpose LLMs and AI Search Engines Sometimes Fail

While I can understand parents using ChatGPT occasionally, I do not think a general-purpose chatbot like ChatGPT is well-suited to most parenting questions. There are multiple problems, including AI hallucinations (making things up), sycophancy (AI wanting to tell you what you want to hear), outdated information, and lack of direction. I’ve written about the first two problems elsewhere, so I’ll focus on the outdated information and lack of direction.

Failure to Update

Consider the situation many first-time parents face. You leave the hospital and strap the baby into the car seat for the first time (did I do that right?). Then you think: “Why’d they let us leave? We don’t know what we’re doing.” You try to remember advice like “back to sleep.”

Eventually, you get visitors, including older friends and relatives. They can tell you’re tired. They give advice on soothing the baby, like giving them a “blankey” or laying them on their tummy. “Nope, that’s not actually what the safe sleep guidelines say.” These folks are sharing what were once considered best practices; they have not kept up with the literature or personally experienced SIDS.

Similarly, you may have people tell you to avoid giving an infant peanut butter, based on the old, flawed advice that likely contributed to high rates of peanut allergies. When our oldest was born, the clinical guidance recommending early exposure had only recently changed. It took time for that information to spread through society, and if ChatGPT and other LLMs had been around then, they might have given outdated information as well.

Unfortunately, generative AI can work the same way. In law, another topic I write about, AI will sometimes suggest citing a legal precedent that was overturned by later decisions. At a basic level, it may do this because its training data contains many more examples of the precedent being cited than of the more recent case that overturned it.

In law, what matters is the more recent case and the rulings made after that decision. But the AI doesn’t necessarily understand that. The same could be said for scientific research. The latest study might end up changing what is considered the prevailing wisdom. But the AI has more examples of the old concept.

Self-directed v. guided learning

ChatGPT also does not give you much guidance. It’s a powerful general-purpose tool, but what questions should you even be asking? Typically, we new parents learn things from other parents, friends, and professionals that we would never even have considered.

For example, healthcare professionals tell us “it is not safe for an infant to sleep with a teddy bear with beady eyes.” If I ask ChatGPT whether this is safe, it says “Short answer: No — it’s not considered safe for an infant to sleep with a teddy bear that has beady/plastic eyes.” It goes on to explain that it’s a choking hazard. But as a first-time parent, I would never have thought to ask that to begin with. I needed someone to direct my learning as a new parent by pointing out risks I hadn’t considered.

Books can also give us a structured way to approach information. They do not just contain information; they also tell us what to learn and in what order. Sometimes we don’t want to be able to ask about anything. We want constraints. We want someone to make us learn only the specific things we need to know for our kids at their current ages and developmental levels. If I have a 24-month-old who is walking and saying two-word sentences, I no longer care about what 20-month-olds or crawlers or one-word kids do. I’m not ready yet for two-and-a-half. One day at a time.