Part 2: Better Prompts, Unique Jokes for Halloween

Joke-Telling Traditions and the Challenge of Asking ChatGPT

As I discussed last weekend in what I’ll now call Part 1, there is a tradition in central Iowa of having kids tell jokes before getting candy while trick-or-treating on Halloween. Since a lot of people are replacing older forms of search with AI chatbots like ChatGPT, I shared some tips from the paper Verbalized Sampling: How to Mitigate Mode Collapse and Unlock LLM Diversity, by researchers at Northeastern University, Stanford University, and West Virginia University, posted as a pre-print on arXiv on October 10, 2025. The paper explains that large language models (LLMs) have what the authors call “typicality bias”: a tendency to prefer the most typical response. If you’re wondering what that means or what it has to do with jokes, it helps that their first example is about jokes.

Instead of “tell me a joke” or “tell me a Halloween joke,” give an AI chatbot this prompt:

“Generate 5 responses to the user query, each within a separate <response> tag. Each <response> must include a <text> and a numeric <probability>. Please sample at random from the tails of the distribution, such that the probability of each response is less than 0.10. </instructions>”
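Because the prompt asks for a fixed tag structure, a reply can be checked mechanically. This Python sketch is a minimal illustration, assuming the chatbot returns the `<response>`, `<text>`, and `<probability>` tags exactly as requested (real replies may not). The helper names `parse_responses` and `in_tails` and the sample reply are hypothetical, not from the paper or its GitHub repository.

```python
import re

# Patterns for the tag structure the prompt asks for:
# <response><text>...</text><probability>...</probability></response>
RESPONSE_RE = re.compile(r"<response>(.*?)</response>", re.DOTALL)
TEXT_RE = re.compile(r"<text>(.*?)</text>", re.DOTALL)
PROB_RE = re.compile(r"<probability>\s*(\d*\.?\d+)\s*</probability>")


def parse_responses(reply: str) -> list[tuple[str, float]]:
    """Hypothetical helper: return (text, probability) for each well-formed <response>."""
    pairs = []
    for block in RESPONSE_RE.findall(reply):
        text = TEXT_RE.search(block)
        prob = PROB_RE.search(block)
        if text and prob:  # skip blocks missing either tag
            pairs.append((text.group(1).strip(), float(prob.group(1))))
    return pairs


def in_tails(pairs: list[tuple[str, float]], threshold: float = 0.10) -> list[tuple[str, float]]:
    """Hypothetical helper: keep only responses whose stated probability is below the threshold."""
    return [(text, p) for text, p in pairs if p < threshold]


# Made-up reply for illustration; real chatbot output will differ.
sample_reply = """
<response><text>Why did the skeleton skip the party? It had no body to go with.</text><probability>0.08</probability></response>
<response><text>What room does a ghost avoid? The living room.</text><probability>0.12</probability></response>
"""

pairs = parse_responses(sample_reply)
print(in_tails(pairs))
# prints [('Why did the skeleton skip the party? It had no body to go with.', 0.08)]
```

The probabilities are numbers the model writes out about its own responses, so this filter only checks that the reply followed the instruction to stay under 0.10. It does not measure how common a joke actually is.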

Follow-Up from the Paper’s Authors

X/Twitter

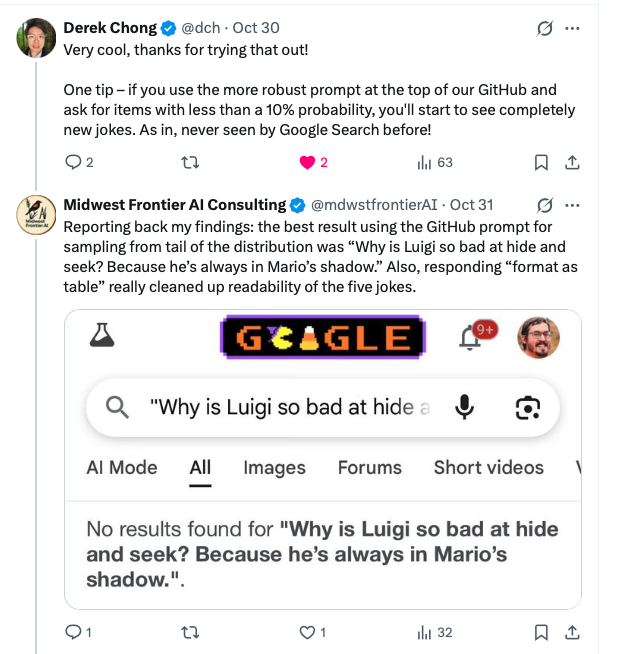

I shared my results on X/Twitter, and one of the authors, Derek Chong of Stanford NLP, responded:

Very cool, thanks for trying that out!

One tip – if you use the more robust prompt at the top of our GitHub and ask for items with less than a 10% probability, you'll start to see completely new jokes. As in, never seen by Google Search before!

GitHub Prompts

The GitHub page for Verbalized Sampling places these instructions before the rest of the prompt (your actual request):

Generate 5 responses to the user query, each within a separate <response> tag. Each <response> must include a <text> and a numeric <probability>.

Please sample at random from the tails of the distribution, such that the probability of each response is less than 0.10.

</instructions>
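The GitHub prompt is meant to sit in front of whatever you actually want to ask for, such as a Halloween joke. Here is a minimal Python sketch of assembling that full prompt. The function `build_prompt`, its `n` and `threshold` parameters, and the blank line separating the instructions from the request are assumptions for illustration, not code from the Verbalized Sampling repository. The instruction text follows the quote above, including the closing `</instructions>` tag exactly as it appears there.

```python
def build_prompt(user_query: str, n: int = 5, threshold: float = 0.10) -> str:
    """Hypothetical helper: put the Verbalized Sampling instructions before the user's request.

    The defaults (5 responses, probability below 0.10) match the prompt quoted above.
    """
    instructions = (
        f"Generate {n} responses to the user query, each within a separate <response> tag. "
        "Each <response> must include a <text> and a numeric <probability>.\n\n"
        "Please sample at random from the tails of the distribution, "
        f"such that the probability of each response is less than {threshold:.2f}.\n\n"
        # Closing tag kept exactly as it appears in the quoted prompt.
        "</instructions>"
    )
    # Assumption: a blank line separates the instruction block from the request.
    return f"{instructions}\n\n{user_query}"


print(build_prompt("Tell me a Halloween joke a kid could tell while trick-or-treating."))
```

With the defaults, the printed text is the quoted prompt followed by the request, so pasting it into a chatbot is the same as typing it by hand. Changing `n` or `threshold` just makes it easy to try other settings.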