tl;dr: If you ask one AI, like ChatGPT, Claude, or Gemini, a question and then double-check the answer on a search engine like Google or Perplexity, you might get burned by AI twice. The first AI might make something up. The second AI might go along with it. And yes, Google Search now includes Google's AI Overview, which can also make things up.

To subscribe to law-focused content, visit the AI & Law Substack by Midwest Frontier AI Consulting.

In re: Turner, Disbarred Attorney and Fake Cases

Iowa Supreme Court Attorney Disciplinary Board v. Royce D. Turner (Iowa)

In July 2025, the Iowa Supreme Court Attorney Disciplinary Board moved to strike multiple recent filings by Respondent Royce D. Turner, including his Brief in Support of Application for Reinstatement, because they contained references to a non-existent Iowa case. Source 1

A later Iowa case, Turner v. Garrels, also involved a pro se litigant named Turner who misused AI. That Turner is a different individual.

Several of Respondent’s filings contain what appears to be at least one AI-generated citation to a case that does not exist or does not stand for the proposition asserted in the filings. —In re: Turner

The Board left room with “or does not stand for the proposition,” but it appears that this was straightforwardly a hallucinated fake case cited as “In re Mears, 979 N.W.2d 122 (Iowa 2022).”

Watch out for Doppelgänger hallucinations!

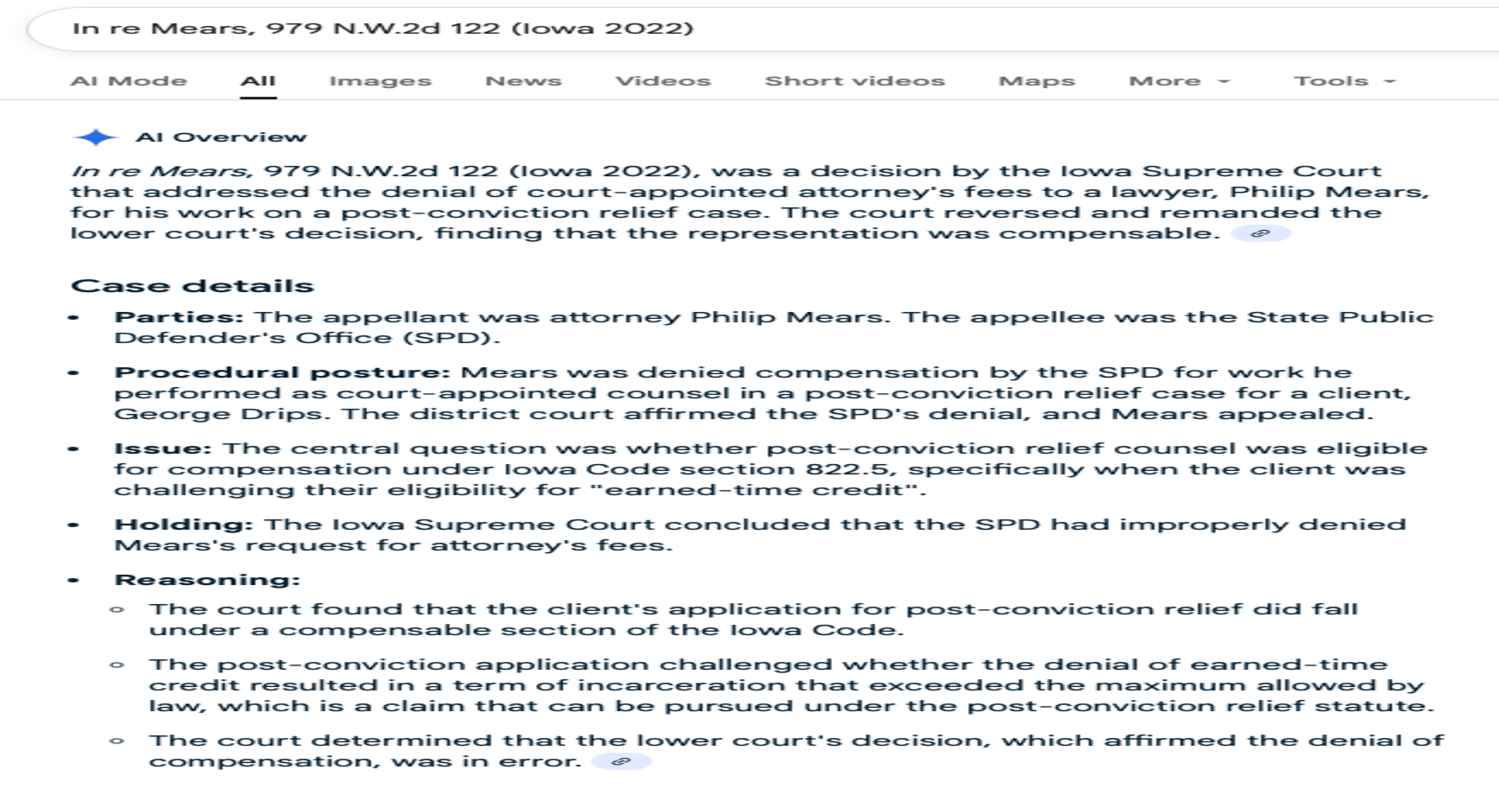

I searched Google for the fake case title “In re Mears, 979 N.W.2d 122 (Iowa 2022)” cited by Turner to see what results came up. What I found were Google hallucinations that seemed to “prove” that the AI-generated case title from Turner referred to a real case. Simply Googling a case title is therefore not sufficient to cross-reference cases, because Google’s AI Overview can also hallucinate. As I have frequently mentioned, law firms that claim not to use AI need to understand that many common and specialist programs, such as Google, Microsoft Word, Westlaw, and LexisNexis, now include generative AI that can introduce hallucinations.
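
As a rough illustration of that cross-referencing step, here is a minimal Python sketch. It splits a citation into volume, reporter, and page, then looks it up in an index of citations that a person has already confirmed in an official reporter or on a court website. The index (`VERIFIED_INDEX`), its placeholder entry, and the function names are all hypothetical and made up for this example. The point is only that an AI-generated summary never counts as confirmation.

```python
import re
from typing import Dict, NamedTuple, Optional, Tuple


class Citation(NamedTuple):
    name: str
    volume: str
    reporter: str
    page: str
    year: str


# Handles only the common "Name, Volume Reporter Page (Court Year)" shape.
CITATION_RE = re.compile(
    r"^(?P<name>.+?),\s+(?P<volume>\d+)\s+(?P<reporter>[A-Za-z0-9.\s]+?)\s+"
    r"(?P<page>\d+)\s+\((?:[^)]*?)\s*(?P<year>\d{4})\)$"
)

# Hypothetical index of citations a human has confirmed in an official source.
# The single entry below is a placeholder, not a real case.
VERIFIED_INDEX: Dict[Tuple[str, str, str], str] = {
    ("1", "Ex. Rep.", "1"): "Example v. Example",
}


def parse_citation(text: str) -> Optional[Citation]:
    """Split a citation string into its parts, or return None if it does not fit."""
    match = CITATION_RE.match(text.strip())
    if match is None:
        return None
    return Citation(
        name=match.group("name").strip(),
        volume=match.group("volume"),
        reporter=match.group("reporter").strip(),
        page=match.group("page"),
        year=match.group("year"),
    )


def check_citation(text: str, index: Dict[Tuple[str, str, str], str]) -> str:
    """Return 'verified', 'name_mismatch', 'unverified', or 'unparseable'."""
    citation = parse_citation(text)
    if citation is None:
        return "unparseable"
    confirmed_name = index.get((citation.volume, citation.reporter, citation.page))
    if confirmed_name is None:
        # Not in the confirmed index: treat as unverified until a human checks.
        return "unverified"
    if confirmed_name.casefold() != citation.name.casefold():
        # The reporter location exists, but it belongs to a different case.
        return "name_mismatch"
    return "verified"


if __name__ == "__main__":
    print(check_citation("In re Mears, 979 N.W.2d 122 (Iowa 2022)", VERIFIED_INDEX))
```

The `name_mismatch` result matters as much as `unverified`: a citation can point to a real reporter page that belongs to an entirely different case.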

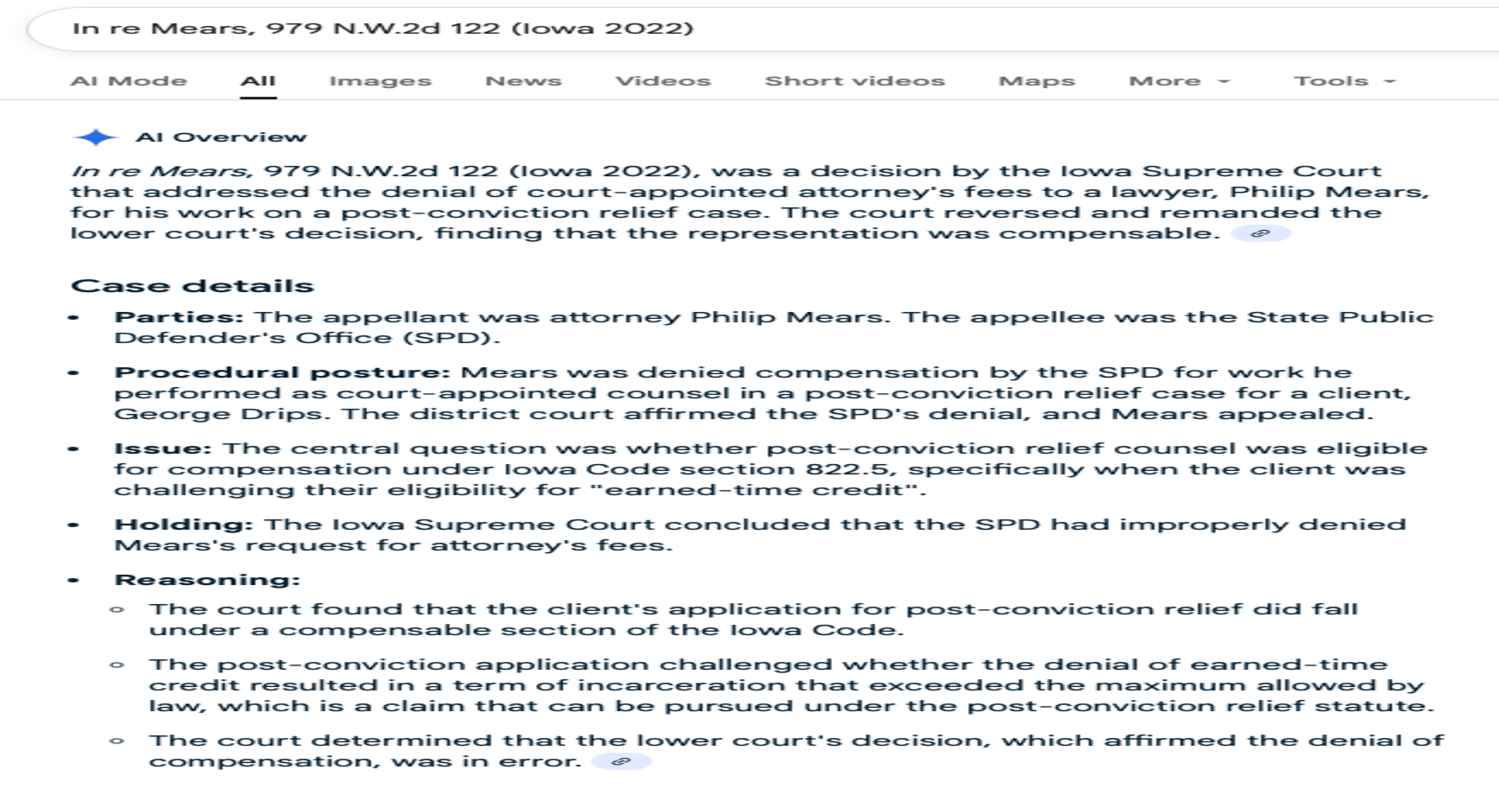

First Google Hallucination

The first time, Google's AI Overview hallucinated an answer stating that the case was a real Iowa Supreme Court decision about court-appointed attorney's fees for a lawyer, but the footnotes linked by Google actually pointed to Mears v. State Public Defenders Office (2013). Key Takeaway: Just because an LLM puts a footnote next to its claim does not mean the footnote supports the statement.

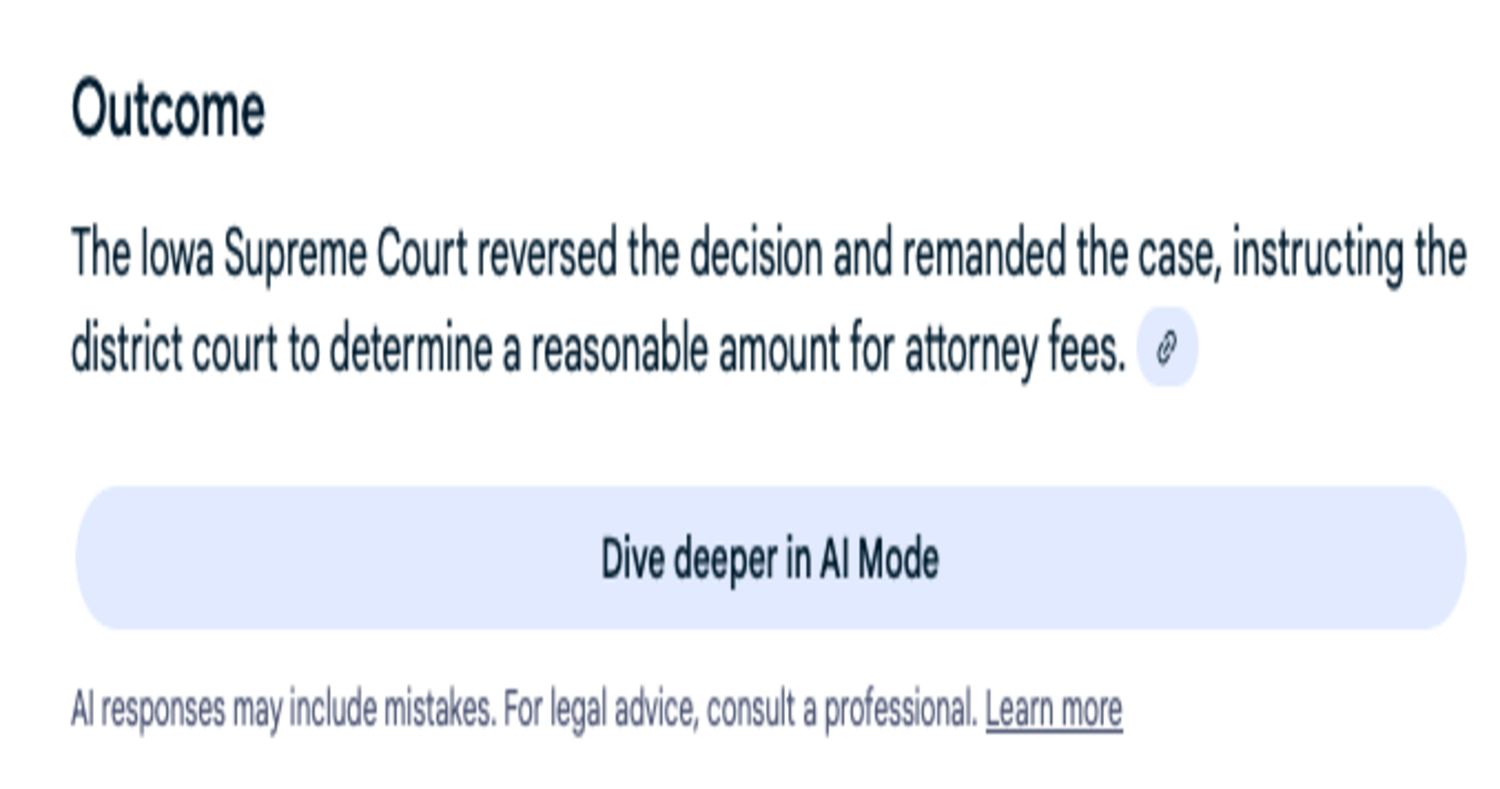

Second Google Hallucination

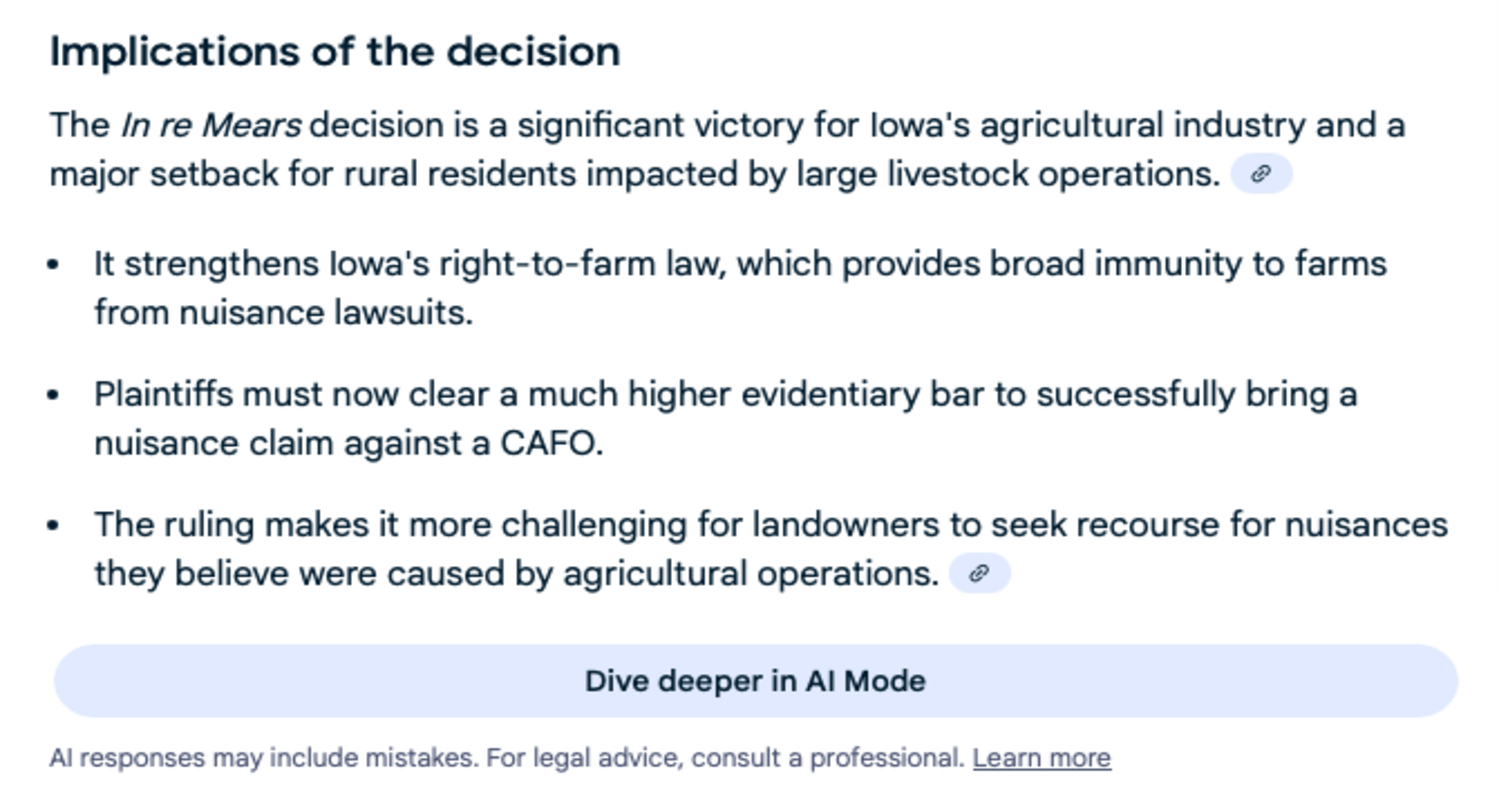

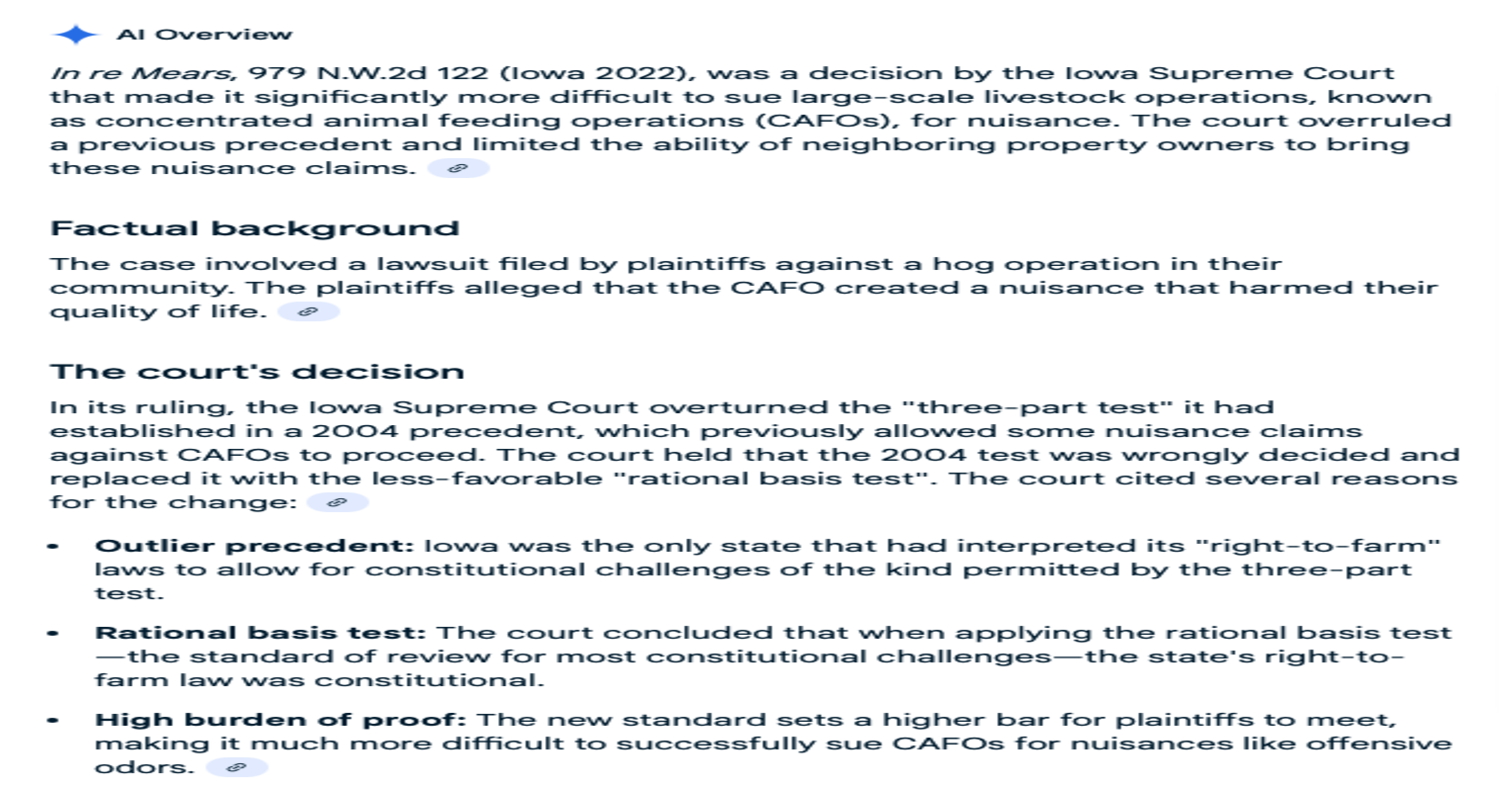

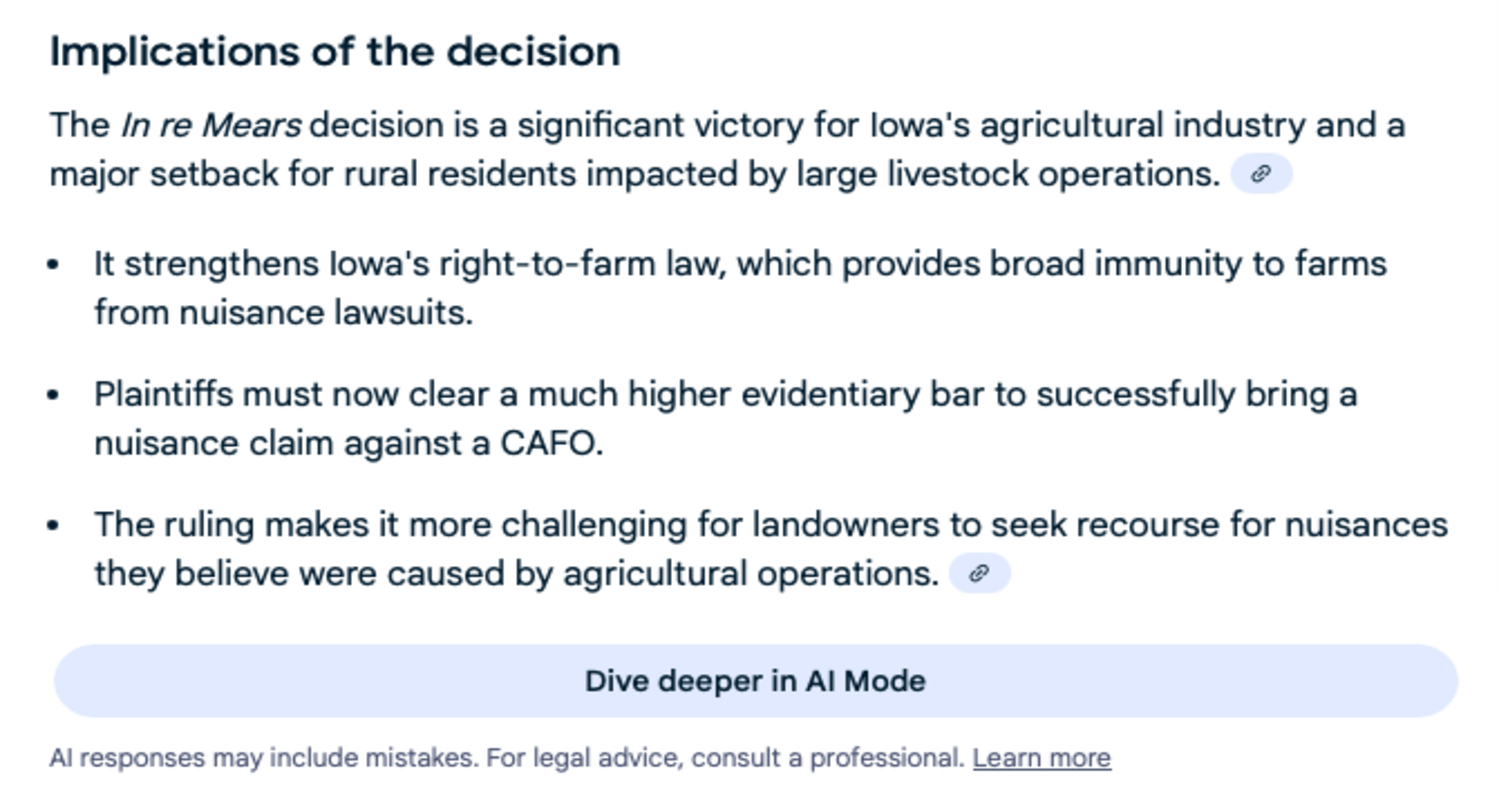

I searched for the same case name again later, to see if Google would warn me that the case did not exist. Instead, it created a different hallucinated summary.

The summary and links related to a 2022 Iowa Supreme Court case, Garrison v. New Fashion Pork LLP, No. 21–0652 (Iowa 2022). Key Takeaway: LLMs are not deterministic and may create different outputs even when given the same inputs.
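
One common reason for this is that LLM-based tools typically sample their output from a probability distribution rather than always picking the single most likely continuation. The toy Python sketch below makes no claim about how Google's AI Overview works internally. The candidate summaries and their scores are invented purely to show how the same input can produce different outputs from one run to the next.

```python
import math
import random
from typing import Dict, List

# Invented scores for three candidate outputs; not taken from any real model.
TOY_SCORES: Dict[str, float] = {
    "summary A": 2.0,
    "summary B": 1.8,
    "summary C": 1.5,
}


def sample_output(scores: Dict[str, float], temperature: float, rng: random.Random) -> str:
    """Pick one candidate at random, weighted by exp(score / temperature)."""
    candidates = list(scores)
    weights = [math.exp(scores[c] / temperature) for c in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]


def run_searches(n: int, temperature: float, seed: int) -> List[str]:
    """Simulate asking the same question n times with a given random seed."""
    rng = random.Random(seed)
    return [sample_output(TOY_SCORES, temperature, rng) for _ in range(n)]


if __name__ == "__main__":
    # Same "question" every time, yet the answers vary across repeated runs.
    print(run_searches(5, temperature=1.0, seed=1))
    print(run_searches(5, temperature=1.0, seed=2))
```

In this sketch the seed stands in for whatever randomness a real system uses on each request. A user running the same search twice has no control over it, which is why a second search is not a reliable check on the first.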

Perplexity AI’s Comet Browser

Perplexity AI, an AI search engine company, recently released a browser for macOS and Windows to compete with browsers like Chrome, Safari, and Edge. I get a lot of ads for AI stuff on social media, so I've been bombarded with a lot of different content recently promoting Comet. To be frank, most of it is incredibly tasteless to the point that I think parents and educators should reject this product on principle. They are clearly advertising this product to students (including medical students!) telling them Comet will help them cheat on homework. There isn't even the fig leaf of "AI tutoring" or any educational value.

Perplexity’s advertising of Comet is encouraging academic dishonesty, including in the medical profession. You do not want to live in a future full of doctors who were assigned to watch a 42-minute video of a live Heart Transplant and instead “watched in 30s” with Comet AI. Yes, that is literally in one of the Perplexity Comet ads. Perplexity’s ads are also making false claims that are trivial to disprove, like “Comet is like if ChatGPT and Chrome merged but without hallucinations, trash sources, or ads.” Comet hallucinates like any other large language model (LLM)-powered AI tool.

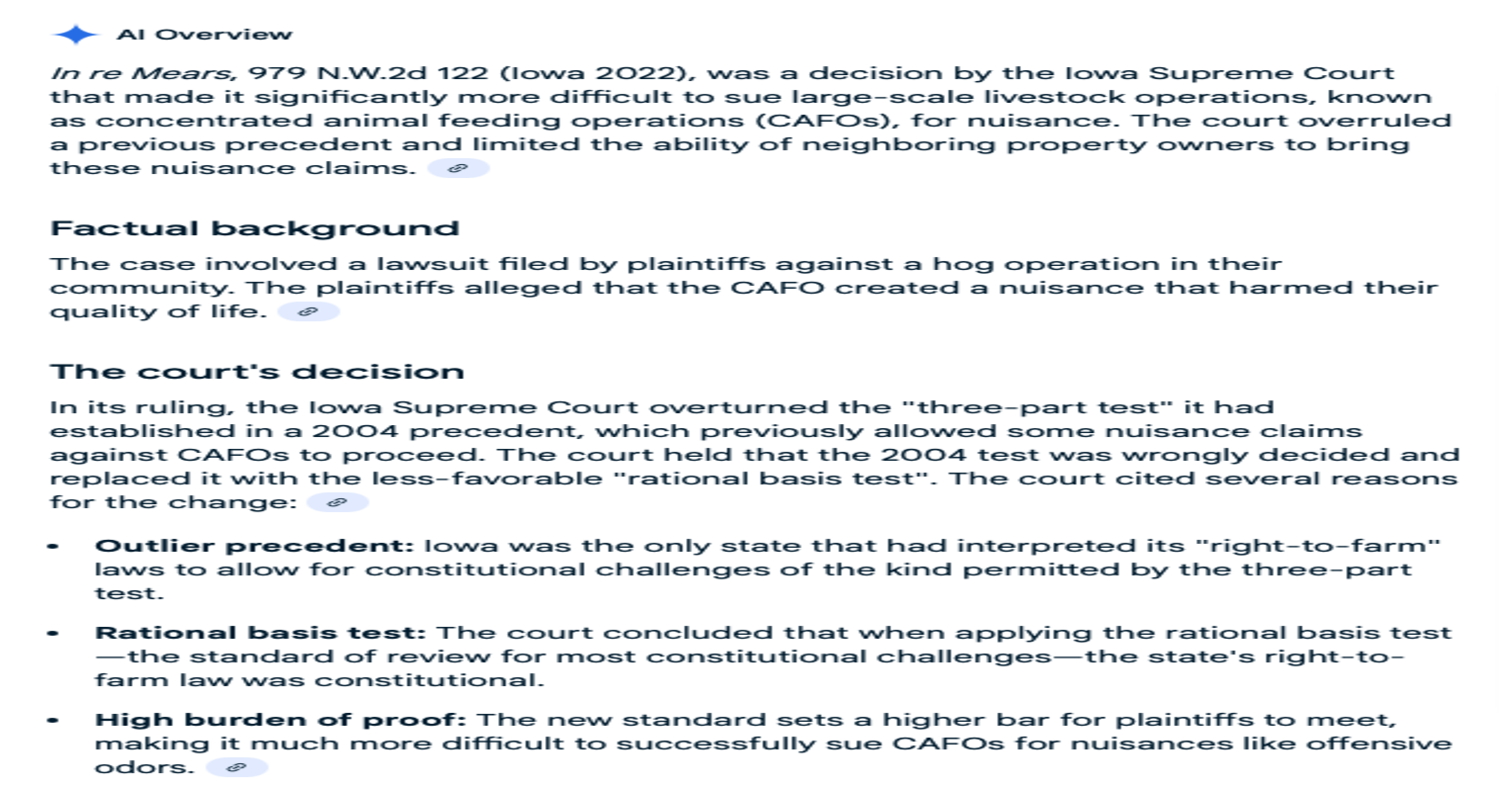

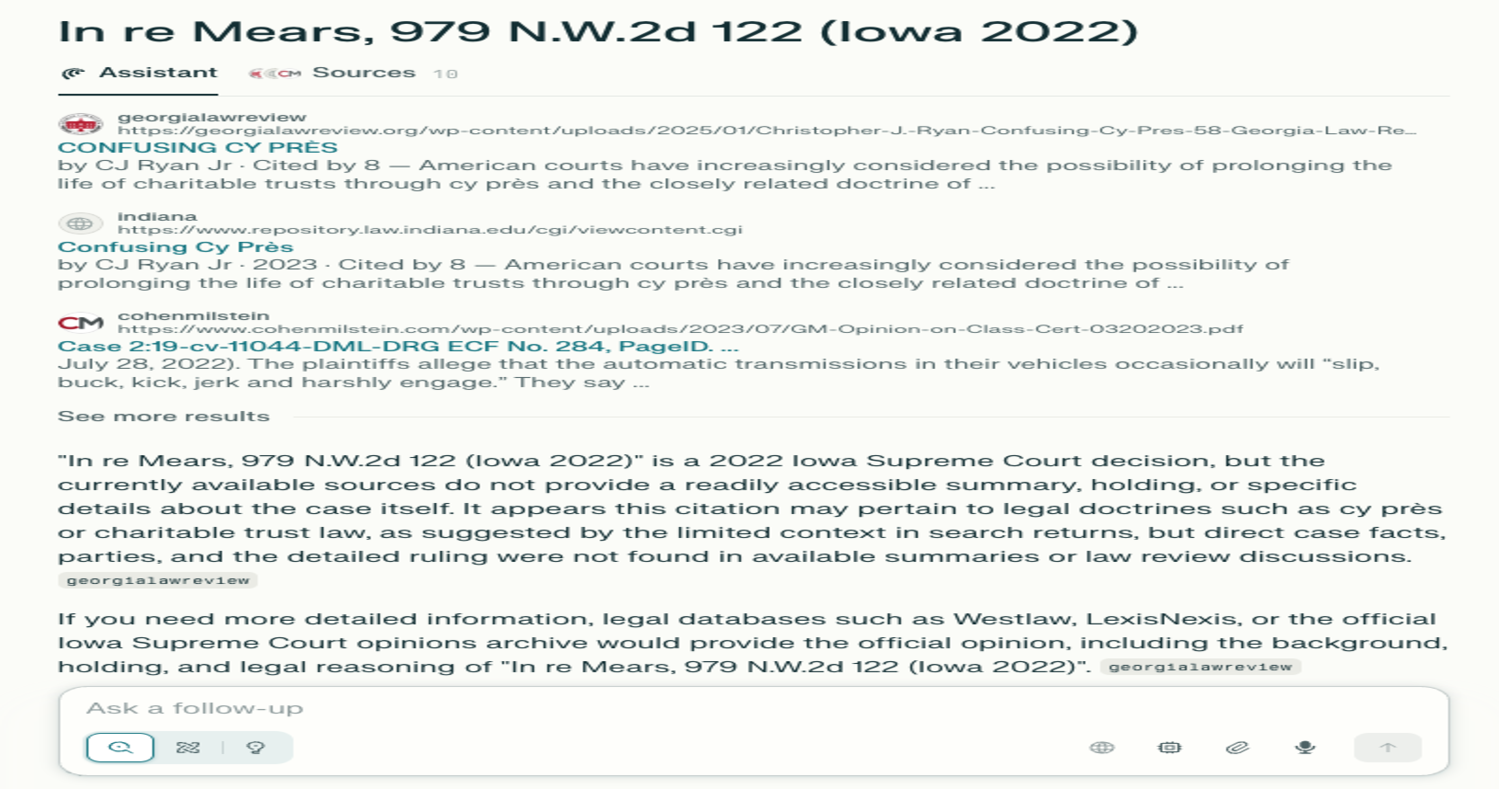

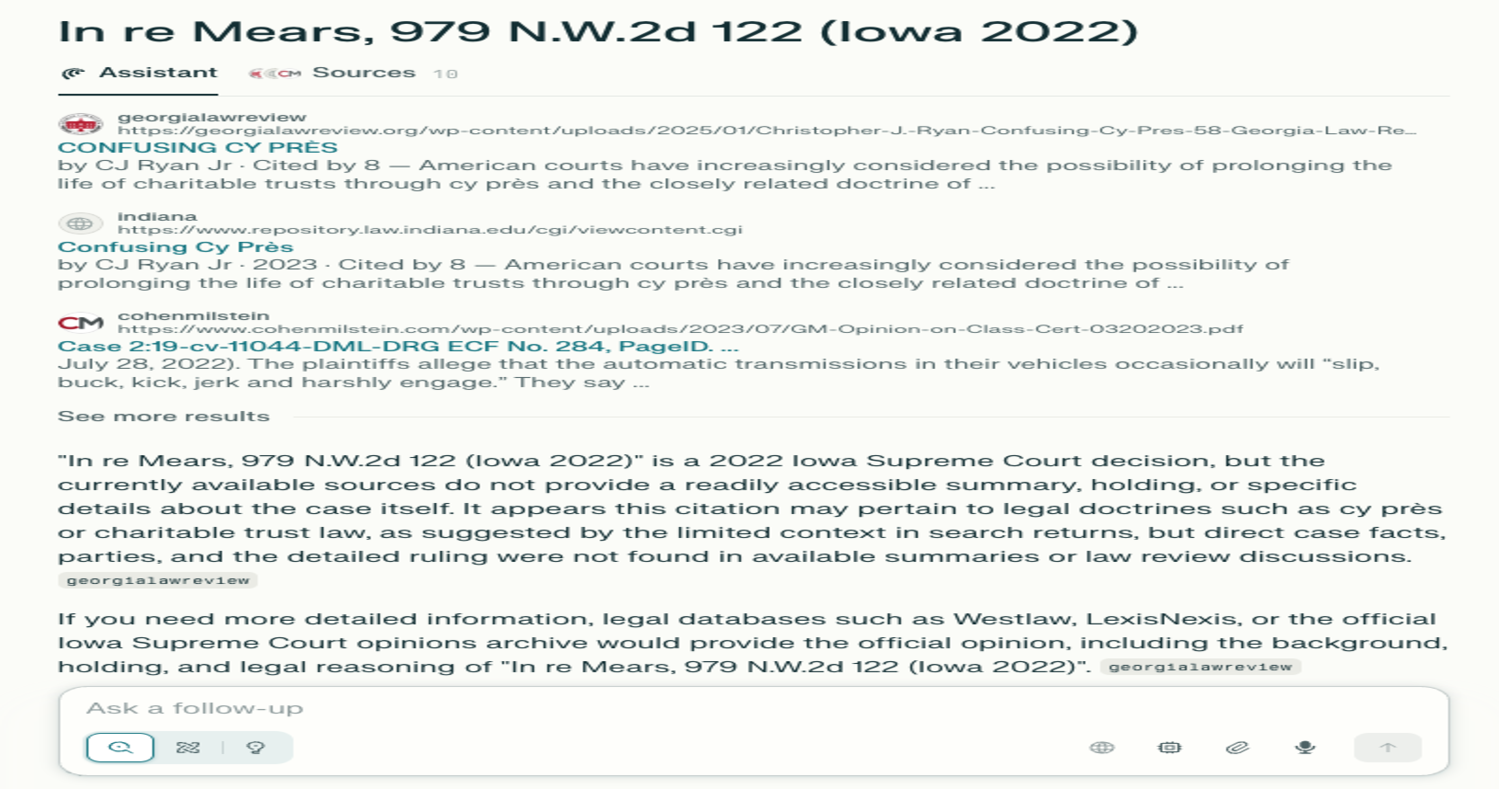

Comet Browser’s Hallucination

I searched for the fake case title “In re Mears, 979 N.W.2d 122 (Iowa 2022)” cited by Turner in a new installation of Comet. It is important to note that people can “game” these types of searches by conducting searches over and over until the AI makes one mistake, then screenshotting that mistake to make a point. That is not what I’m doing here. This was the very first result from my first search. It was a hallucination that explicitly stated the fake case “is a 2022 Iowa Supreme Court decision,” although this is followed by caveats that cast doubt on whether it really is an existing case:

"In re Mears, 979 N.W.2d 122 (Iowa 2022)" is a 2022 Iowa Supreme Court decision, but the currently available sources do not provide a readily accessible summary, holding, or specific details about the case itself. It appears this citation may pertain to legal doctrines such as cy près or charitable trust law, as suggested by the limited context in search returns, but direct case facts, parties, and the detailed ruling were not found in available summaries or law review discussions. georgialawreview

If you need more detailed information, legal databases such as Westlaw, LexisNexis, or the official Iowa Supreme Court opinions archive would provide the official opinion, including the background, holding, and legal reasoning of "In re Mears, 979 N.W.2d 122 (Iowa 2022)".

If you were to follow up on the caveats in the second paragraph, you would learn that the case does not exist. However, this is still a hallucination, because it describes the case as if it exists and does not mention the one relevant source, In re: Turner, which would tell you that it is a citation to a fake case.